High Availability (HA) in MySQL refers to a collection of options and solutions meant to keep the MySQL database system operational even during times of hardware failures, network troubles, or other unforeseen events. This is critical for applications that rely on consistent database availability to support business activities.

Key Components and Strategies for High Availability in MySQL:

Replication:

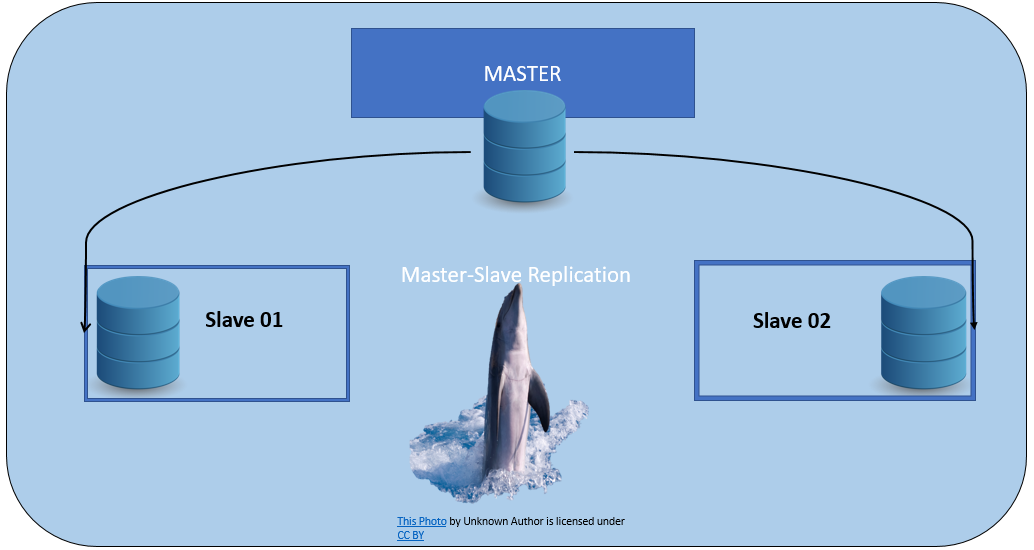

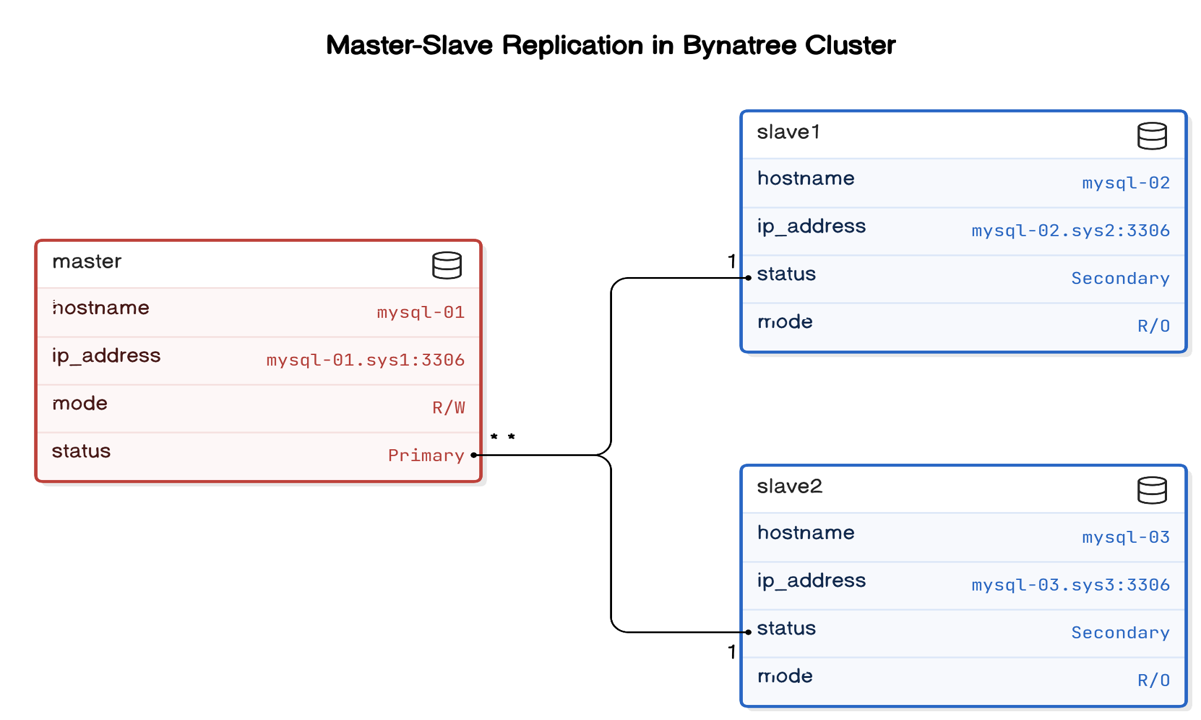

Master-Slave Replication: A single primary server (master) replicates data to one or more secondary machines (slaves). This configuration allows read requests to be split across slaves, improving speed and ensuring redundancy.

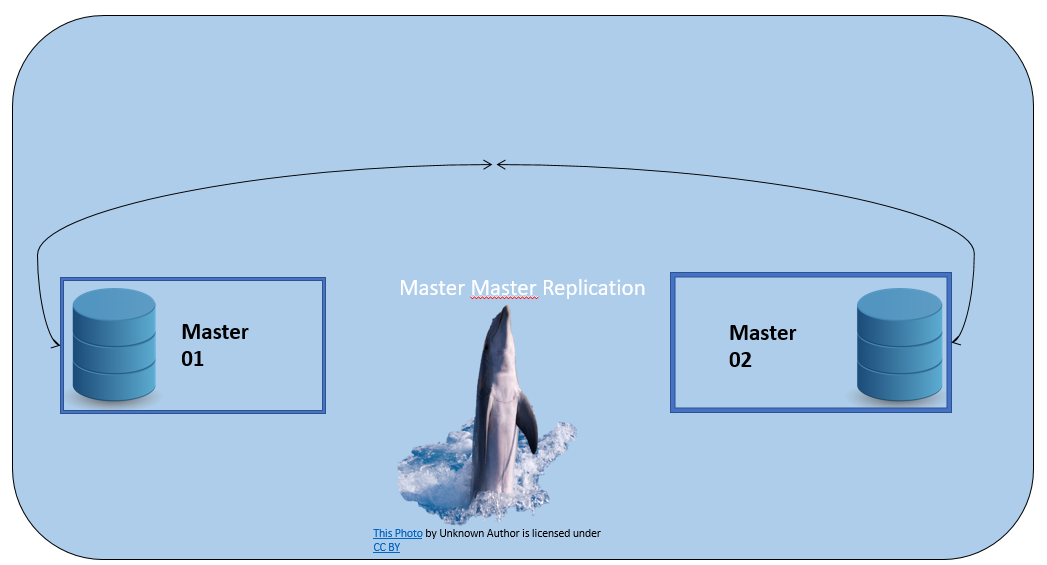

Master-Master Replication: This involves two or more servers replicating to each other. This arrangement provides improved failure tolerance and enables read-write(R/W) activities across several servers.

Master-Master Replication: This involves two or more servers replicating to each other. This arrangement provides improved failure tolerance and enables read-write(R/W) activities across several servers.

Clustering:

Clustering:

InnoDB Cluster: Uses Group Replication to build a high-availability configuration in which several MySQL instances work together as a cluster. It ensures that if any one of them fails, the other nodes keep functioning properly.

Automatic Failover:

MySQL InnoDB Cluster: This solution includes MySQL Router, which reroutes connections if a node fails, ensuring the database’s availability.

MySQL Replication Manager (MySQL MHA) is a utility that automates failover for MySQL replication installations, ensuring that one of the slave servers will be promoted to master if the master crashes.

Importance of High Availability in MySQL

- Operational stability: Ensures that important apps and services are always accessible, avoiding downtime that might cost you money or lose your customers’ confidence.

- Data integrity and redundancy: By replicating data across multiple machines, HA configurations prevent data loss and assure that data is consistent and correct.

- Performance Optimization: Distributing the number of queries across different servers increases the database system’s overall speed and responsiveness.

- Scalability: High availability solutions enable databases to expand out, meeting increased data loads and user needs while maintaining performance.

- Cost efficiency: Prevents the costs of unplanned downtime and data recovery attempts. Investing in HA solutions can lead to considerable long-term cost savings.

In our blog we will set up a step by step process for High Availability in mysql using InnoDB Cluster in CentOS08.

Prerequisites:

Prerequisites:

- setup hostname.

- install mysqlshell in all the three nodes.

Set up hostname entry in /etc/hosts

| 1 2 3 4 5 6 7 8 | sudo hostnamectl set-hostname mysql-01.sys1 sudo hostnamectl set-hostname mysql-02.sys2 sudo hostnamectl set-hostname mysql-03.sys3 vi /etc/hosts ***.***.**.*** mysql-01.sys1 ***.***.**.*** mysql-02.sys2 ***.***.**.*** mysql-03.sys3 |

Install mysqlshell on each node.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 | [root@Master centos]# wget mysql-shell-8.0.38-1.el8.x86_64.rpm --2024-11-08 02:58:58-- http://mysql-shell-8.0.38-1.el8.x86_64.rpm/ Resolving mysql-shell-8.0.38-1.el8.x86_64.rpm (mysql-shell-8.0.38-1.el8.x86_64.rpm)... [root@Master centos]# sudo yum localinstall -y mysql-shell-8.0.38-1.el8.x86_64.rpm Dependencies resolved. ============================================================================================================================================================== Package Architecture Version ============================================================================================================================================================== Installing: mysql-shell x86_64 8.0.38-1.el8 Installing dependencies: python39-libs x86_64 3.9.19-1.module_el8+1051+5bea6413 python39-pip-wheel noarch 20.2.4-9.module_el8+963+a9c12c76 python39-setuptools-wheel noarch 50.3.2-5.module_el8+695+192a31a9 Installing weak dependencies: python39 x86_64 3.9.19-1.module_el8+1051+5bea6413 python39-pip noarch 20.2.4-9.module_el8+963+a9c12c76 python39-setuptools noarch 50.3.2-5.module_el8+695+192a31a9 Enabling module streams: python39 3.9 Transaction Summary ============================================================================================================================================================== Install 7 Packages Total size: 45 M Total download size: 13 M Installed size: 278 M Downloading Packages: (1/6): python39-3.9.19-1.module_el8+1051+5bea6413.x86_64.rpm (2/6): python39-pip-wheel-20.2.4-9.module_el8+963+a9c12c76.noarch.rpm (3/6): python39-pip-20.2.4-9.module_el8+963+a9c12c76.noarch.rpm (4/6): python39-setuptools-wheel-50.3.2-5.module_el8+695+192a31a9.noarch.rpm (5/6): python39-setuptools-50.3.2-5.module_el8+695+192a31a9.noarch.rpm (6/6): python39-libs-3.9.19-1.module_el8+1051+5bea6413.x86_64.rpm -------------------------------------------------------------------------------------------------------------------------------------------------------------- Total Running transaction check Transaction check succeeded. Running transaction test Transaction test succeeded. Running transaction Preparing : Installing : python39-setuptools-wheel-50.3.2-5.module_el8+695+192a31a9.noarch Installing : python39-pip-wheel-20.2.4-9.module_el8+963+a9c12c76.noarch Installing : python39-libs-3.9.19-1.module_el8+1051+5bea6413.x86_64 Installing : python39-3.9.19-1.module_el8+1051+5bea6413.x86_64 Running scriptlet: python39-3.9.19-1.module_el8+1051+5bea6413.x86_64 Installing : python39-setuptools-50.3.2-5.module_el8+695+192a31a9.noarch Running scriptlet: python39-setuptools-50.3.2-5.module_el8+695+192a31a9.noarch Installing : python39-pip-20.2.4-9.module_el8+963+a9c12c76.noarch Running scriptlet: python39-pip-20.2.4-9.module_el8+963+a9c12c76.noarch Installing : mysql-shell-8.0.38-1.el8.x86_64 Running scriptlet: mysql-shell-8.0.38-1.el8.x86_64 Verifying : python39-3.9.19-1.module_el8+1051+5bea6413.x86_64 Verifying : python39-libs-3.9.19-1.module_el8+1051+5bea6413.x86_64 Verifying : python39-pip-20.2.4-9.module_el8+963+a9c12c76.noarch Verifying : python39-pip-wheel-20.2.4-9.module_el8+963+a9c12c76.noarch Verifying : python39-setuptools-50.3.2-5.module_el8+695+192a31a9.noarch Verifying : python39-setuptools-wheel-50.3.2-5.module_el8+695+192a31a9.noarch Verifying : mysql-shell-8.0.38-1.el8.x86_64 Installed: mysql-shell-8.0.38-1.el8.x86_64 python39-3.9.19-1.module_el8+1051+5bea6413.x86_64 python39-libs-3.9.1 python39-pip-20.2.4-9.module_el8+963+a9c12c76.noarch python39-pip-wheel-20.2.4-9.module_el8+963+a9c12c76.noarch python39-setuptools python39-setuptools-wheel-50.3.2-5.module_el8+695+192a31a9.noarch Complete! |

| 1 2 | [root@Master centos]# mysqlsh --version mysqlsh Ver 8.0.38 for Linux on x86_64 - for MySQL 8.0.38 (MySQL Community Server (GPL)) |

Create a new admin account with required permissions

To connect to the MySQL shell and configure the

root@localhost

instance for an InnoDB cluster, let’s create a new admin account with the minimal necessary privileges. In this example, we’ll create a user named

admin123

with the least privileges required for managing the InnoDB cluster.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 | [root@Master centos]# mysqlsh MySQL Shell 8.0.38 Copyright (c) 2016, 2024, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help' or '?' for help; 'quit' to exit. MySQL JS > dba.configureInstance("root@localhost") Configuring local MySQL instance listening at port 3306 for use in an InnoDB cluster... This instance reports its own address as Master:3306 Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable shou ERROR: User 'root' can only connect from 'localhost'. New account(s) with proper source address specification to allow remote connection from all instances mu 1) Create remotely usable account for 'root' with same grants and password 2) Create a new admin account for InnoDB cluster with minimal required grants 3) Ignore and continue 4) Cancel Please select an option [1]: 2 Please provide an account name (e.g: icroot@%) to have it created with the necessary privileges or leave empty and press Enter to cancel. Account Name: admin123 Password for new account: ************ Confirm password: ************ applierWorkerThreads will be set to the default value of 4. NOTE: Some configuration options need to be fixed: +----------------------------------------+---------------+----------------+--------------------------------------------------+ | Variable | Current Value | Required Value | Note | +----------------------------------------+---------------+----------------+--------------------------------------------------+ | binlog_transaction_dependency_tracking | COMMIT_ORDER | WRITESET | Update the server variable | | enforce_gtid_consistency | OFF | ON | Update read-only variable and restart the server | | gtid_mode | OFF | ON | Update read-only variable and restart the server | | replica_parallel_type | DATABASE | LOGICAL_CLOCK | Update the server variable | | replica_preserve_commit_order | OFF | ON | Update the server variable | | server_id | 1 | <unique ID> | Update read-only variable and restart the server | +----------------------------------------+---------------+----------------+--------------------------------------------------+ Some variables need to be changed, but cannot be done dynamically on the server. Do you want to perform the required configuration changes? [y/n]: y Do you want to restart the instance after configuring it? [y/n]: y Creating user admin123@%. Account admin123@% was successfully created. Configuring instance... The instance 'Master:3306' was configured to be used in an InnoDB cluster. Restarting MySQL... NOTE: MySQL server at Master:3306 was restarted. MySQL JS > c admin123@localhost:3306 Creating a session to 'admin123@localhost:3306' Please provide the password for 'admin123@localhost:3306': ************ Save password for 'admin123@localhost:3306'? [Y]es/[N]o/Ne[v]er (default No): y Fetching schema names for auto-completion... Press ^C to stop. Your MySQL connection id is 9 Server version: 8.0.40 Source distribution No default schema selected; type use <schema> to set one. |

Verify the instance configuration on primary account

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 | MySQL localhost:3306 ssl JS > dba.checkInstanceConfiguration() Validating local MySQL instance listening at port 3306 for use in an InnoDB cluster... This instance reports its own address as mysql-01.sys1:3306 Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable shou Checking whether existing tables comply with Group Replication requirements... No incompatible tables detected Checking instance configuration... Instance configuration is compatible with InnoDB cluster The instance 'mysql-01.sys1:3306' is valid to be used in an InnoDB cluster. { "status": "ok" } |

Create new MySQL cluster

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 | MySQL localhost:3306 ssl JS > dba.createCluster("bynatreecluster") A new InnoDB Cluster will be created on instance 'mysql-01.sys1:3306'. Disabling super_read_only mode on instance 'mysql-01.sys1:3306'. Validating instance configuration at localhost:3306... This instance reports its own address as mysql-01.sys1:3306 Instance configuration is suitable. NOTE: Group Replication will communicate with other members using 'mysql-01.sys1:33061'. Use the localAddress option to override. * Checking connectivity and SSL configuration... Creating InnoDB Cluster 'bynatreecluster' on 'mysql-01.sys1:3306'... Adding Seed Instance... Cluster successfully created. Use Cluster.addInstance() to add MySQL instances. At least 3 instances are needed for the cluster to be able to withstand up to one server failure. <Cluster:bynatreecluster> |

Verify the cluster status

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | MySQL localhost:3306 ssl JS > var cluster = dba.getCluster(); MySQL localhost:3306 ssl JS > cluster.status() { "clusterName": "bynatreecluster", "defaultReplicaSet": { "name": "default", "primary": "mysql-01.sys1:3306", "ssl": "REQUIRED", "status": "OK_NO_TOLERANCE", "statusText": "Cluster is NOT tolerant to any failures.", "topology": { "mysql-01.sys1:3306": { "address": "mysql-01.sys1:3306", "memberRole": "PRIMARY", "mode": "R/W", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.26" } }, "topologyMode": "Single-Primary" }, "groupInformationSourceMember": "mysql-01.sys1:3306" } |

Add instances to the cluster

Adding instance 2 and instance 3 to the cluster

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 | MySQL localhost:3306 ssl JS > cluster.addInstance('admin123@mysql-02.sys2:3306'); The safest and most convenient way to provision a new instance is through automatic clone provisioning, which will completely overwrite the state of 'mysql-02sting cluster member. To use this method by default, set the 'recoveryMethod' option to 'clone'. The incremental state recovery may be safely used if you are sure all updates ever executed in the cluster were done with GTIDs enabled, there are no purged tme GTID set as the cluster or a subset of it. To use this method by default, set the 'recoveryMethod' option to 'incremental'. Incremental state recovery was selected because it seems to be safely usable. WARNING: The instance 'mysql-02.sys2:3306' is only read compatible with the cluster, thus it will join the cluster in R/O mode. Validating instance configuration at mysql-02.sys2:3306... This instance reports its own address as mysql-02.sys2:3306 Instance configuration is suitable. NOTE: Group Replication will communicate with other members using 'mysql-02.sys2:33061'. Use the localAddress option to override. * Checking connectivity and SSL configuration... A new instance will be added to the InnoDB Cluster. Depending on the amount of data on the cluster this might take from a few seconds to several hours. Adding instance to the cluster... Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background. Incremental state recovery is now in progress. * Waiting for distributed recovery to finish... NOTE: 'mysql-02.sys2:3306' is being recovered from 'mysql-01.sys1:3306' * Distributed recovery has finished The instance 'mysql-02.sys2:3306' was successfully added to the cluster. MySQL localhost:3306 ssl JS > cluster.addInstance('admin123@mysql-03.sys3:3306'); The safest and most convenient way to provision a new instance is through automatic clone provisioning, which will completely overwrite the state of 'mysql-03sting cluster member. To use this method by default, set the 'recoveryMethod' option to 'clone'. The incremental state recovery may be safely used if you are sure all updates ever executed in the cluster were done with GTIDs enabled, there are no purged tme GTID set as the cluster or a subset of it. To use this method by default, set the 'recoveryMethod' option to 'incremental'. Incremental state recovery was selected because it seems to be safely usable. WARNING: The instance 'mysql-03.sys3:3306' is only read compatible with the cluster, thus it will join the cluster in R/O mode. Validating instance configuration at mysql-03.sys3:3306... This instance reports its own address as mysql-03.sys3:3306 Instance configuration is suitable. NOTE: Group Replication will communicate with other members using 'mysql-03.sys3:33061'. Use the localAddress option to override. * Checking connectivity and SSL configuration... A new instance will be added to the InnoDB Cluster. Depending on the amount of data on the cluster this might take from a few seconds to several hours. Adding instance to the cluster... Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background. Incremental state recovery is now in progress. * Waiting for distributed recovery to finish... NOTE: 'mysql-03.sys3:3306' is being recovered from 'mysql-02.sys2:3306' * Distributed recovery has finished The instance 'mysql-03.sys3:3306' was successfully added to the cluster. |

Verify the cluster status after adding the instances

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 | MySQL localhost:3306 ssl JS > cluster.status() { "clusterName": "bynatreecluster", "defaultReplicaSet": { "name": "default", "primary": "mysql-01.sys1:3306", "ssl": "REQUIRED", "status": "OK", "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.", "topology": { "mysql-01.sys1:3306": { "address": "mysql-01.sys1:3306", "memberRole": "PRIMARY", "mode": "R/W", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" }, "mysql-02.sys2:3306": { "address": "mysql-02.sys2:3306", "memberRole": "SECONDARY", "mode": "R/O", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" }, "mysql-03.sys3:3306": { "address": "mysql-03.sys3:3306", "memberRole": "SECONDARY", "mode": "R/O", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" } }, "topologyMode": "Single-Primary" }, "groupInformationSourceMember": "mysql-01.sys1:3306" } |

Install MySQL-Router

| 1 2 3 4 5 6 7 | [root@mysql-01 centos]# wget mysql-router-community-8.0.40-1.el8.x86_64.rpm [root@mysql-01 centos]# rpm -ivh mysql-router-community-8.0.40-1.el8.x86_64.rpm warning: mysql-router-community-8.0.40-1.el8.x86_64.rpm: Header V4 RSA/SHA256 Signature, key ID a8d3785c: NOKEY Verifying... ################################# [100%] Preparing... ################################# [100%] Updating / installing... 1:mysql-router-community-8.0.40-1.e################################# [100%] |

Setup new user account for MySQL-Router

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | MySQL localhost:33060+ ssl JS > var cluster=dba.getcluster() Invalid object member getcluster (AttributeError) MySQL localhost:33060+ ssl JS > var cluster = dba.getCluster(); MySQL localhost:33060+ ssl JS > cluster.setupRouterAccount('myrouter') Missing the password for new account myrouter@%. Please provide one. Password for new account: ************ Confirm password: ************ Creating user myrouter@%. Account myrouter@% was successfully created. MySQL localhost:33060+ ssl JS > q Bye! [root@mysql-01 centos]# useradd router_user |

Configure MySQL-Router

Configure MySQL-Router to enable access from application.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 | [root@mysql-01 router_user]# mysqlrouter --bootstrap admin123@localhost -d /home/router_user/router --account myrouter --user=router_user Please enter MySQL password for admin123: # Bootstrapping MySQL Router 8.0.40 (MySQL Community - GPL) instance at '/home/router_user/router'... Please enter MySQL password for myrouter: - Creating account(s) (only those that are needed, if any) Failed changing the authentication plugin for account 'myrouter'@'%': mysql_native_password which is deprecated is the default authentication plugin on this - Verifying account (using it to run SQL queries that would be run by Router) - Storing account in keyring - Adjusting permissions of generated files - Creating configuration /home/router_user/router/mysqlrouter.conf # MySQL Router configured for the InnoDB Cluster 'bynatreecluster' After this MySQL Router has been started with the generated configuration $ mysqlrouter -c /home/router_user/router/mysqlrouter.conf InnoDB Cluster 'bynatreecluster' can be reached by connecting to: ## MySQL Classic protocol - Read/Write Connections: localhost:6446 - Read/Only Connections: localhost:6447 ## MySQL X protocol - Read/Write Connections: localhost:6448 - Read/Only Connections: localhost:6449 On /home/router_user/router/ path [root@mysql-01 router]# total 16 drwx------ 2 router_user router_user 116 Nov 8 10:18 data drwx------ 2 router_user router_user 29 Nov 8 10:18 log -rw------- 1 router_user router_user 2012 Nov 8 10:18 mysqlrouter.conf -rw------- 1 router_user router_user 95 Nov 8 10:18 mysqlrouter.key drwx------ 2 router_user router_user 6 Nov 8 10:18 run -rwx------ 1 router_user router_user 306 Nov 8 10:18 start.sh -rwx------ 1 router_user router_user 182 Nov 8 10:18 stop.sh [root@mysql-01 router]# ./start.sh [root@mysql-01 router]# PID 58424 written to '/home/router_user/router/mysqlrouter.pid' stopping to log to the console. Continuing to log to filelog |

Verify replication

verify the replication by creating a database. Here a database with name dummy is created.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 | [root@mysql-01 router]# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 1203 Server version: 8.0.40 Source distribution Copyright (c) 2000, 2024, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> show databases; +-------------------------------+ | Database | +-------------------------------+ | Audit_Storage | | Dictionary | | Students | | information_schema | | mysql | | mysql_innodb_cluster_metadata | | performance_schema | | sys | | testdb | | testdb01 | +-------------------------------+ 10 rows in set (0.07 sec) mysql> create database dummy; Query OK, 1 row affected (0.08 sec) mysql> q Bye |

Switch the roles

For HA testing promote secondary nodes to a new primary and verify status of the cluster.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 | MySQL localhost:33060+ ssl JS > cluster.setPrimaryInstance('mysql-02.sys2:3306') Setting instance 'mysql-02.sys2:3306' as the primary instance of cluster 'bynatreecluster'... Instance 'mysql-03.sys3:3306' remains SECONDARY. Instance 'mysql-01.sys1:3306' was switched from PRIMARY to SECONDARY. Instance 'mysql-02.sys2:3306' was switched from SECONDARY to PRIMARY. The instance 'mysql-02.sys2:3306' was successfully elected as primary. MySQL localhost:33060+ ssl JS > cluster.status(); { "clusterName": "bynatreecluster", "defaultReplicaSet": { "clusterErrors": [ "Group communication protocol in use is version 8.0.16 but it is possible to upgrade to 8.0.27. Single Consensus Leader can only be enabled after upgrade. Use Cluster.rescan({upgradeCommProtocol:true}) to upgrade." ], "name": "default", "primary": "mysql-02.sys2:3306", "ssl": "REQUIRED", "status": "OK", "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.", "topology": { "mysql-01.sys1:3306": { "address": "mysql-01.sys1:3306", "memberRole": "SECONDARY", "mode": "R/O", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" }, "mysql-02.sys2:3306": { "address": "mysql-02.sys2:3306", "memberRole": "PRIMARY", "mode": "R/W", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" }, "mysql-03.sys3:3306": { "address": "mysql-03.sys3:3306", "memberRole": "SECONDARY", "mode": "R/O", "readReplicas": {}, "replicationLag": "applier_queue_applied", "role": "HA", "status": "ONLINE", "version": "8.0.40" } }, "topologyMode": "Single-Primary" }, "groupInformationSourceMember": "mysql-02.sys2:3306" } |

Conclusion:

Businesses may use InnoDB Cluster to build an effective and highly available MySQL infrastructure that supports continuous operations and strong disaster recovery. This safeguards that important apps and services remain accessible while protecting data integrity and performance.

Clustering:

Clustering: Prerequisites:

Prerequisites: